Hyperconverged Supermicro a2sdi-4c-hln4f

mAucun résumé des modifications |

Aucun résumé des modifications |

||

| (44 versions intermédiaires par le même utilisateur non affichées) | |||

| Ligne 1 : | Ligne 1 : | ||

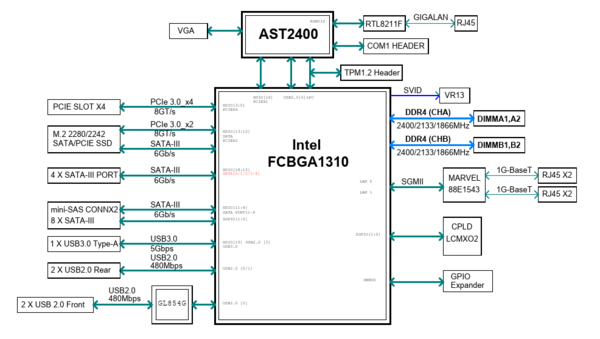

This howto aims at describing the choices and the build of a compact homelab with a hyperconverged chassis based on a [https://www.supermicro.com/en/products/motherboard/A2SDi-4C-HLN4F Supermicro A2SDi-4C-HLN4F]. The hypervisor OS will be | This howto aims at describing the choices and the build of a compact homelab with a hyperconverged chassis based on a [https://www.supermicro.com/en/products/motherboard/A2SDi-4C-HLN4F Supermicro A2SDi-4C-HLN4F] motherboard. The hypervisor OS will be ''Slackware64-15.0'' (with ''Qemu/KVM'' for virtualization), the storage will be provided by a ''Truenas core'' VM (thanks to pci-passthrough) and network orchestrated by an ''OPNSense'' VM. | ||

== Motivations == | == Motivations == | ||

Why such a | Why such a motherboard with a modest 4 cores [https://ark.intel.com/content/www/us/en/ark/products/97937/intel-atom-processor-c3558-8m-cache-up-to-2-20-ghz.html Intel Atom C3558] ? | ||

Let's see the advantages : | Let's see the advantages : | ||

* obviously, it's very compact (mini-itx form factor) | * obviously, it's very compact (mini-itx form factor) | ||

| Ligne 20 : | Ligne 20 : | ||

* no external USB 3.0 ports (ony one "internal", on the motherboard itself) ; this is desirable to plug an external HDD for local backups (USB 2.0 is too slow) | * no external USB 3.0 ports (ony one "internal", on the motherboard itself) ; this is desirable to plug an external HDD for local backups (USB 2.0 is too slow) | ||

== Hardware == | |||

== 2.5 or 3.5 inches drives ? == | === 2.5 or 3.5 inches drives ? === | ||

What is required for this project : | What is required for this project : | ||

* Hypervisor OS on RAID 1 with two drives | * mini tower format chassis | ||

* 4 TB of encrypted data with a minimum of resilience ( | * Hypervisor OS on soft RAID 1 with two drives | ||

* it is recommended not to exceed 80% of the capacity of a ZFS | * at least 4 TB of encrypted data with a minimum of resilience (mirror vdevs or raidz1 / raidz2 ...) | ||

* it is recommended not to exceed 80% of the capacity of a ZFS filesystem, so, for 4 TB of usable data, 5 TB of raw capacity is required | |||

* all disks easily accessible from the front of the chassis | * all disks easily accessible from the front of the chassis | ||

Choosing between 2.5 or 3.5 inches is not so easy. Obviously, for raw capacity over a gigabit network, | Choosing between 2.5 (SFF) or 3.5 (LFF) inches drives is not so easy. Obviously, for raw capacity over a gigabit network, LFF HDD are unbeatable in terms of price. In France (as of march 2021), it costs ~ 80 euros for a 2 TB NAS 3.5 LFF HDD ... Same price for a 1 TB NAS SFF HDD. Moreover, SFF HDD over 2 TB simply doesn't exist for consumer NAS systems (enterprise SFF HDDs exist but are way too expensive). LFF HDD are a clear winner ? What about chassis size, cooling, noise and iops ? | ||

At this point, there are two choices : | |||

* 2 * 500 GB SFF SSD (RAID 1 for hypervisor and VMs OS) plus 2 * 6 TB LFF HDD (mirror vdev storage) | |||

* 2 * 500 GB SFF SSD (RAID 1 for hypervisor and VMs OS) plus 4 * 2 TB SFF SSD (raidz1 storage) | |||

The most reasonable would be to use LFF HDDs but silent operation in a tiny chassis with decent iops are very important, so let's stick with the "unreasonable" configuration ! | |||

=== Cooling the CPU === | |||

As seen earlier, a standard CPU fan cannot be fitted on the heat sink. But we are in the 21st century and 3D printing is available, so why not a custom CPU fan shroud ? ^^ | |||

[[Fichier:Cpu_fan_shroud.jpg|center|thumb|600px|CPU fan shroud]] | |||

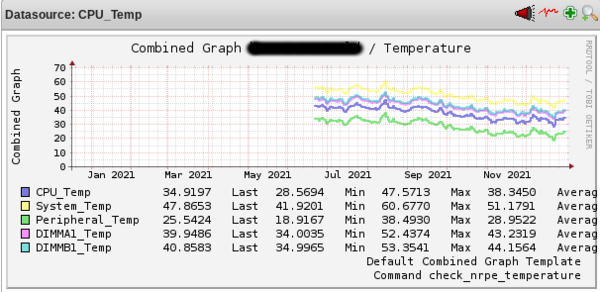

Is it absolutely necessary ? I don't think so. Does it help ? Sure ! On a similar system located in an attic (so hot in summer), I attached the measured temperatures over the last months (see below). No more than 47 °C for the CPU ... Without the fan shroud, I think you should expect overall 5 °C more on average. | |||

[[Fichier:Temperatures.png|center|thumb|600px|Temperatures]] | |||

=== Final system === | |||

* [https://www.chieftec.eu/products-detail/82/CT-01B-350GPB Chieftec mini tower case] | |||

* [https://www.bequiet.com/fr/powersupply/1549 Be Quiet 400W 80plus gold PSU] | |||

* [https://www.supermicro.com/en/products/motherboard/A2SDi-4C-HLN4F Supermicro A2SDi-4C-HLN4F] | |||

* 64 GB DDR4 RAM ECC (4 * 16 GB RDIMM) | |||

* [https://www.icydock.fr/goods.php?id=150 ToughArmor MB992SK-B] for hypervisor and VMs OS with 2 * [https://shop.westerndigital.com/fr-fr/products/internal-drives/wd-red-sata-2-5-ssd#WDS500G1R0A WD SA500] | |||

* [https://www.icydock.fr/goods.php?id=143 ToughArmor MB994SP-4SB-1] for data storage (with fans off) with 4 * [https://www.crucial.fr/ssd/mx500/ct2000mx500ssd1 Crucial MX500] | |||

* [https://www.startech.com/fr-fr/cartes-additionelles-et-peripheriques/pexusb3s25 PCIe card with 2 * USB 3.0 ports] | |||

<gallery mode="traditional" widths=600px heights=600px> | |||

Image:Chassis_inside.jpg|''[[commons:Inside the chassis|Inside the chassis]]'' | |||

Image:Details_in_chassis.jpg|''[[commons:Details|Details]]'' | |||

</gallery> | |||

== Hypervisor installation == | |||

=== BMC setup === | |||

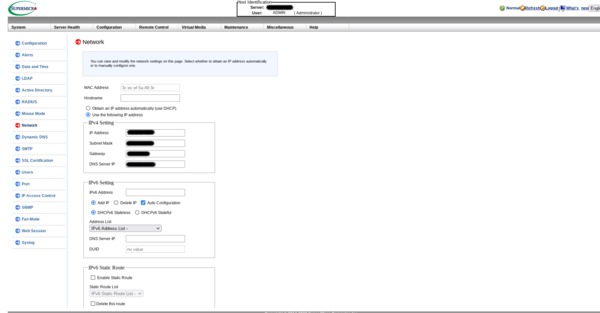

First things first, taking control of the BMC. These days, Supermicro's BMC are configured by default for DHCP and dedicated Ethernet port. There is no more the "ADMIN" default password but a random one written on a sticker directly on the motherboard (along with the MAC address of the BMC). Be sure to remember these informations before plugging the dedicated port on a switch of your your LAN. | |||

With the MAC address in mind, it should be easy to retrieve the IP address assigned by the DHCP server, or (better), you could assign a dedicated IP address to your BMC within the DHCP server. | |||

Then I suggest you configure the BMC with a fixed IP address, not an IP attributed by a DHCP server. If your LAN goes berserk and you have to connect to your BMC, it will be easier. Two choices : through the WebUI (example below) or through the UEFI BIOS. | |||

[[Fichier:Webui_network.png|center|thumb|600px|Webui BMC network configuration]] | |||

With the BMC configured, you have full control of your system through your favorite Web browser. HTML5 web Console Redirection is a fantastic feature not often available for free with other vendors (such as Dell, HPE or Lenovo for example). | |||

=== PXE boot === | |||

It's of course possible to install your favorite OS by booting thanks to a USB stick. Having already an operational homelab, PXE boot is even easier and quicker. With the Supermicro a2sdi-4c-hln4f, there is just one caveat : no legacy boot available, only UEFI (well, the manual and options within the BIOS suggest legacy should be possible but in reality it's not working at all). | |||

Through the UEFI BIOS options, you should verify the boot order or press F12 during the boot of the system and choose ''UEFI Network''. | |||

=== Operating system (slackware64-15.0) === | |||

==== Preparations ==== | |||

Let's assume a Slackware Busybox prompt is available (resulting either from booting with a USB key or through network). The soft RAID 1 has to be configured at this stage, of course on the right disks ! (remember, the system is composed of 6 drives, 2 SSDs for OS and 4 SSDs for data). | |||

The ''lsblk'' command is a good tool to retrive and identify the drives connected to a system. | |||

On the Supermicro a2sdi-4c-hln4f, the 4 drives connected to the mini-SAS port are identified first (sda, sdb, sdc, sdd), the 2 more drives connected to tradtionnal SATA ports come next (sde, sdf). It is on those two drives (sde, sdf) that the soft RAID 1 is going to be configured. | |||

Reference documentation for this kind of setup : | |||

* [http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_RAID.TXT README_RAID.TXT] | |||

* [http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_LVM.TXT README_LVM.TXT] | |||

* [http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_UEFI.TXT README_UEFI.TXT] | |||

I recommend two identical partitions per disk (sde and sdf) such as : | |||

<syntaxhighlight lang="bash"> | |||

# fdisk -l /dev/sde | |||

Disk /dev/sde: 465.76 GiB, 500107862016 bytes, 976773168 sectors | |||

Disk model: WDC WDS500G1R0A | |||

Units: sectors of 1 * 512 = 512 bytes | |||

Sector size (logical/physical): 512 bytes / 512 bytes | |||

I/O size (minimum/optimal): 512 bytes / 512 bytes | |||

Disklabel type: gpt | |||

Disk identifier: 96BE3679-F270-4EA4-88FF-2935AE4DCB46 | |||

Device Start End Sectors Size Type | |||

/dev/sde1 2048 534527 532480 260M EFI System | |||

/dev/sde2 534528 976773134 976238607 465.5G Linux RAID | |||

</syntaxhighlight> | |||

When you're done with one disk (e.g. ''sde''), you can clone the partionning to another disk (e.g. ''sdf'') with these commands : | |||

<syntaxhighlight lang="bash"> | |||

sgdisk -R /dev/sdf /dev/sde # clone | |||

sgdisk -G /dev/sdf # set a new GUID | |||

</syntaxhighlight> | |||

Let's build the soft RAID 1 : | |||

<syntaxhighlight lang="bash"> | |||

mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sde2 /dev/sdf2 | |||

</syntaxhighlight> | |||

Checking disk synchronization : | |||

<syntaxhighlight lang="bash"> | |||

watch -n2 cat /proc/mdstat | |||

</syntaxhighlight> | |||

Saving the RAID configuration for system boot : | |||

<syntaxhighlight lang="bash> | |||

mdadm --examine --scan > /etc/mdadm.conf | |||

</syntaxhighlight> | |||

Using LVM afterwards is a good option : | |||

<syntaxhighlight lang="bash> | |||

pvcreate /dev/md0 | |||

vgcreate <MY_VOLUME> /dev/md0 | |||

lvcreate -L+1g -nswap <MY_VOLUME> | |||

lvcreate -L+4g -nroot <MY_VOLUME> | |||

... | |||

</syntaxhighlight> | |||

Then you can launch the famous Slackware ''setup'' command and proceed through the OS installation itself. Don't forget to create an ''initrd'' (thanks to ''mkinitrd_command_generator.sh'' which should choose the right options) and be sure to use ''elilo'' (or ''grub'') as a bootloader. | |||

Here is my ''elilo.conf'' as an example : | |||

<syntaxhighlight lang="bash> | |||

chooser=simple | |||

delay=1 | |||

timeout=1 | |||

# | |||

image=vmlinuz | |||

label=vmlinuz | |||

initrd=initrd.gz | |||

read-only | |||

append="root=/dev/system/root vga=normal ro consoleblank=0 transparent_hugepage=never hugepagesz=1G default_hugepagesz=1G hugepages=60 intel_iommu=on iommu=pt libata.allow_tpm=1 preempt=none" | |||

</syntaxhighlight> | |||

Little explanations : | |||

* ''transparent_hugepage=never hugepagesz=1G default_hugepagesz=1G hugepages=60'' : 60 hugepages of 1GB of RAM reserved for virtualization | |||

* ''intel_iommu=on iommu=pt'' : recommanded option for PCI devices passthrough | |||

* ''libata.allow_tpm=1'' : [https://github.com/Drive-Trust-Alliance/sedutil/blob/master/README.md mandatory option for SATA SED disks] (more on that later) | |||

* ''preempt=none'' : disable kernel preemption (only really useful for desktop systems) | |||

==== Booting ==== | |||

It's the tricky part ... Supermicro does not allow a command such as ''efibootmgr'' to insert or modify boot options in the UEFI subsystem ([https://www.supermicro.com/support/faqs/faq.cfm?faq=27004 as mentioned in a Supermicro FAQ]) ... At least if you are using an unsigned Linux kernel and no secure boot. It's technically feasible with Slackware ([https://docs.slackware.com/howtos:security:enabling_secure_boot as explained here]) but I never tested it. | |||

I scratched my head a little while on this matter and found [https://gist.github.com/xiconfjs/f66feaaa012a2c80013dafc41e820c2d a solution online] | |||

=== Virtualization layer === | |||

I'm currently using [https://www.qemu.org Qemu] version 6.2.0 along with [https://libvirt.org/ Libvirt] version 8.1.0. Libvirt is optional (especially if you hate XML ^^) but could be useful with a tool such as [https://virt-manager.org/ virt-manager]. | |||

There is no official Slackware packages for Qemu or Libvirt but thanks to [https://slackbuilds.org/ slackbuilds.org], you do not have to start from nothing. | |||

I recommand compiling Qemu with [https://en.wikipedia.org/wiki/Io_uring io_uring] for best I/O performances. The [https://slackbuilds.org/repository/15.0/system/qemu/ slackbuild] will take care of that if the dependency is already installed | |||

== Truenas core == | |||

I'm a big fan of this network storage appliance system. It's robust (based on FreeBSD and ZFS), well maintained, with a solid community. It's also heavy on ressources (especially RAM, count at least 16GB). | |||

There is an endless debate on whether or not to use ECC RAM ... Facts are : | |||

* it's definitely working without ECC | |||

* you can run into cases such as [https://news.ycombinator.com/item?id=22158340 this incident] where ECC RAM prevents your filesystem from being corrupted | |||

Conclusion : use ECC RAM and make backups ^^ | |||

=== Data encryption === | |||

Two alternatives identified : | |||

* native ZFS encryption within Truenas | |||

* [https://en.wikipedia.org/wiki/Hardware-based_full_disk_encryption SED] encryption (Crucial MX500 disks are TCG Opal 2.0 compatible) | |||

SED encryption comes with a caveat in the case of PCI passthrough. Disks are indeed activated when the hypervisor starts up, not the Truenas VM ; and it resulted in SED operations (locking / unlocking) not working as intented in Truenas. I decided to use [https://github.com/Drive-Trust-Alliance/sedutil sedutil-cli] in the hypervisor, before the Truenas VM starts up (disks are automatically locked when powered off). The operation of unlocking the drives is manual but the password isn't stored inside Truenas. | |||

Command to define a password on a new SED drive : | |||

<syntaxhighlight lang="bash"> | |||

sedutil-cli --initialsetup <password> <drive> | |||

</syntaxhighlight> | |||

Script example to unlock the drives : | |||

<syntaxhighlight lang="bash"> | |||

#!/bin/bash | |||

SEDUTIL="/usr/sbin/sedutil-cli" | |||

echo -n "SED password: " | |||

read -s password | |||

echo | |||

if [ ! -x $SEDUTIL ]; then | |||

echo "no sedutil-cli found" | |||

exit 1 | |||

fi | |||

_drives=$($SEDUTIL --scan | awk '$2 == "2" {print $1}') | |||

if [ "x$_drives" = "x" ]; then | |||

echo "no SED drive found" | |||

exit 1 | |||

fi | |||

for _drive in $_drives; do | |||

$SEDUTIL --setLockingRange 0 RW $password $_drive | |||

$SEDUTIL --setMBRDone on $password $_drive | |||

done | |||

</syntaxhighlight> | |||

But why using SED encryption in the first place ? Well, as mentioned earlier, the C3558 is a modest CPU and even if AES-NI instructions are available, I wanted to spare extra cycles. Moreover, I'm not paranoid about data encryption and I think SED is a sweet spot between performance and security. I ran a little bench with ''fio'' to illustrate this : | |||

{| class="wikitable" style="text-align:center;" | |||

|- style="text-align:left;" | |||

! rowspan="3" | | |||

! colspan="2" | RAIDZ (no crypto) | |||

! colspan="2" | RAIDZ (zfs crypto) | |||

! colspan="2" | RAIDZ (SED crypto) | |||

|- style="text-align:left;" | |||

| colspan="2" style="text-align:center;" | VCPUs | |||

| colspan="2" style="text-align:center;" | VCPUs | |||

| colspan="2" style="text-align:center;" | VCPUs | |||

|- | |||

| 2 | |||

| 3 | |||

| 2 | |||

| 3 | |||

| 2 | |||

| 3 | |||

|- | |||

| style="text-align:left;" | sequential write (MB/s) | |||

| 178 | |||

| 264 | |||

| 153 | |||

| 222 | |||

| | |||

| 245 | |||

|- | |||

| style="text-align:left;" | sequential read (MB/s) | |||

| | |||

| 442 | |||

| | |||

| 288 | |||

| | |||

| 436 | |||

|} | |||

More CPU power is needed for SSDs to realize their full potential. But this particular setup cosumes ... 38W at full speed (it's powered on 24/7) and I just need to saturate a 1 Gb/s network. | |||

=== Libvirt XML configuration === | |||

Special points of attention. | |||

* Using huge pages for memory : | |||

<syntaxhighlight lang="xml"> | |||

<memoryBacking> | |||

<hugepages/> | |||

</memoryBacking> | |||

</syntaxhighlight> | |||

* Using io_uring for Truenas boot disk : | |||

<syntaxhighlight lang="xml"> | |||

<disk type='block' device='disk'> | |||

<driver name='qemu' type='raw' cache='none' io='io_uring'/> | |||

<source dev='/dev/<VOLUME>/<DISK>'/> | |||

<target dev='vda' bus='virtio'/> | |||

</disk> | |||

</syntaxhighlight> | |||

* SATA controller PCI passthrough (mini-SAS) : | |||

<syntaxhighlight lang="xml"> | |||

<hostdev mode='subsystem' type='pci' managed='yes'> | |||

<driver name='vfio'/> | |||

<source> | |||

<address domain='0x0000' bus='0x00' slot='0x13' function='0x0'/> | |||

</source> | |||

</hostdev> | |||

</syntaxhighlight> | |||

== OPNSense == | |||

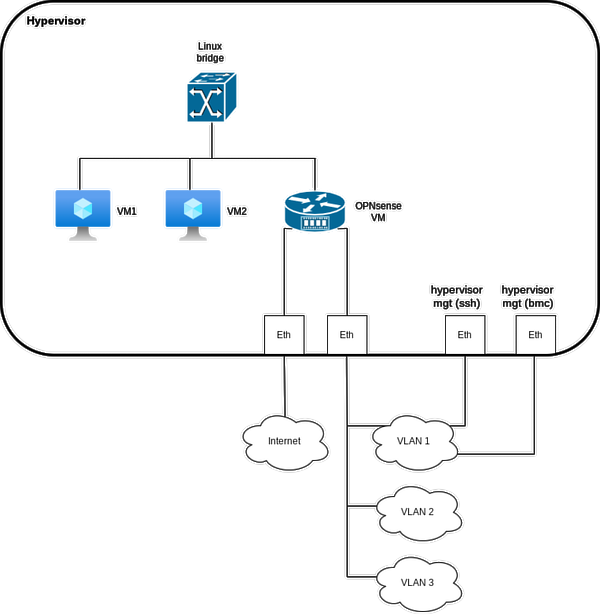

[https://opnsense.org/ OPNSense] (fork of [https://www.pfsense.org/ PFSense]) is another top notch appliance based on FreeBSD but network and firewall oriented this time. Thanks to its four 1 Gb/s Ethernet interfaces, the Supermicro A2SDi-4C-HLN4F motherboard is a perfect candidate to host a router / firewall. | |||

Aimed goal : | |||

[[Fichier:Network.png|center|thumb|600px|Network diagram]] | |||

=== Ethernet PCI passthrough === | |||

While it's not mandatory, PCI passthrough the different Ethernet devices to the OPNSense VM is recommanded, especially if VLANs will be used in conjunction with NAT to give access to Internet. In this case, the MTU will be 1500 all over the networks and no need to tweak the clients OS to lower it. Virtual interfaces requires indeed lowering the MTU with VLANs. | |||

If virtual interfaces are used (to bridge OPNSense with some VMs on the hypervisor, such as a Truenas one for example !), the global setting disabling all hardware offload can be applied. It's possible to override it for specific hardware interfaces (where all offloads are welcome and will work, such as checksum, LRO, TSO or VLAN filtering) | |||

Latest revision as of 13 December 2022, 00:32

This howto describes the design choices and the build of a compact homelab with a hyperconverged chassis based on a Supermicro A2SDi-4C-HLN4F motherboard. The hypervisor OS is Slackware64-15.0 (with Qemu/KVM for virtualization), storage is provided by a Truenas core VM (thanks to PCI passthrough) and the network is orchestrated by an OPNSense VM.

Motivations

Why choose a motherboard with a modest 4-core Intel Atom C3558? Let's look at the advantages:

- obviously, it's very compact (mini-itx form factor)

- up to 256 GB ECC RDIMM RAM supported

- the CPU has a very low TDP (~ 17W), so no need for a fancy and potentially noisy cooling solution

- 4 * 1 Gb/s Ethernet ports (cool for a network appliance such as OPNSense and there's no need for 10 Gb/s for this project)

- dedicated IPMI Ethernet port

- the SATA ports are split across two distinct controllers, each a separate PCI device (see below; this is very important for PCI passthrough and removes the need for an additional HBA card)

Well, the system has drawbacks too :

- the CPU power will not be extraordinary

- it's not possible to put a fan directly on top of the CPU heatsink (more on that later)

- no external USB 3.0 ports (only one "internal" port, on the motherboard itself); an external USB 3.0 port would be desirable to plug in an external HDD for local backups (USB 2.0 is too slow)

Hardware

2.5-inch or 3.5-inch drives?

What is required for this project :

- mini tower format chassis

- Hypervisor OS on soft RAID 1 with two drives

- at least 4 TB of encrypted data with a minimum of resilience (mirror vdevs or raidz1 / raidz2 ...)

- it is recommended not to exceed 80% of the capacity of a ZFS pool, so 4 TB of usable data requires about 5 TB of pool capacity (4 TB / 0.8), before counting the disks used for redundancy

- all disks easily accessible from the front of the chassis

Choosing between 2.5-inch (SFF) and 3.5-inch (LFF) drives is not so easy. Obviously, for raw capacity over a gigabit network, LFF HDDs are unbeatable in terms of price. In France (as of March 2021), a 2 TB NAS LFF HDD costs about 80 euros, the same price as a 1 TB NAS SFF HDD. Moreover, SFF HDDs over 2 TB simply don't exist for consumer NAS systems (enterprise SFF HDDs exist but are way too expensive). So are LFF HDDs the clear winner? What about chassis size, cooling, noise and IOPS?

At this point, there are two choices :

- 2 * 500 GB SFF SSD (RAID 1 for hypervisor and VMs OS) plus 2 * 6 TB LFF HDD (mirror vdev storage)

- 2 * 500 GB SFF SSD (RAID 1 for hypervisor and VMs OS) plus 4 * 2 TB SFF SSD (raidz1 storage)
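Both candidate layouts can be checked against the 80% guideline with a bit of integer arithmetic. The sketch below is only an approximation: it ignores ZFS metadata overhead and the TB / TiB difference, and the function names are made up for this example.

```shell
#!/bin/sh
# Rough capacity check (sizes in GB, integer arithmetic only).
# Assumption: ZFS metadata overhead and TB vs TiB are ignored.

# Pool capacity needed so that DATA_GB fills at most MAX_FILL_PCT percent.
required_pool_gb() {
    data_gb=$1; max_fill_pct=$2
    echo $(( (data_gb * 100 + max_fill_pct - 1) / max_fill_pct ))  # rounded up
}

# Capacity of a single mirror vdev: one disk's worth, whatever the disk count.
mirror_pool_gb() {
    disk_gb=$2
    echo "$disk_gb"
}

# Capacity of a single raidz1 vdev: one disk's worth goes to parity.
raidz1_pool_gb() {
    disks=$1; disk_gb=$2
    echo $(( (disks - 1) * disk_gb ))
}

required_pool_gb 4000 80   # capacity needed for 4 TB of data
mirror_pool_gb 2 6000      # 2 * 6 TB LFF HDD in a mirror
raidz1_pool_gb 4 2000      # 4 * 2 TB SFF SSD in raidz1
```

Under these assumptions both layouts end up with roughly 6 TB of pool capacity, which leaves some margin above the 5 TB needed for 4 TB of data.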

The most reasonable choice would be LFF HDDs, but silent operation in a tiny chassis with decent IOPS is very important to me, so let's stick with the "unreasonable" configuration (the all-SSD one)!

Cooling the CPU

As seen earlier, a standard CPU fan cannot be fitted on the heat sink. But we are in the 21st century and 3D printing is available, so why not a custom CPU fan shroud ? ^^

Is it absolutely necessary? I don't think so. Does it help? Sure! On a similar system located in an attic (so hot in summer), the temperatures measured over the last months (figure "Temperatures") never exceeded 47 °C for the CPU. Without the fan shroud, I think you should expect about 5 °C more on average.

Final system

- Chieftec mini tower case

- Be Quiet 400W 80plus gold PSU

- Supermicro A2SDi-4C-HLN4F

- 64 GB DDR4 RAM ECC (4 * 16 GB RDIMM)

- ToughArmor MB992SK-B for hypervisor and VMs OS with 2 * WD SA500

- ToughArmor MB994SP-4SB-1 for data storage (with fans off) with 4 * Crucial MX500

- PCIe card with 2 * USB 3.0 ports

Hypervisor installation

BMC setup

First things first: take control of the BMC. These days, Supermicro BMCs are configured by default for DHCP on the dedicated Ethernet port. The default password is no longer "ADMIN" but a random one printed on a sticker on the motherboard itself (along with the MAC address of the BMC). Be sure to note this information before plugging the dedicated port into a switch on your LAN.

With the MAC address at hand, it should be easy to retrieve the IP address assigned by the DHCP server or, better, to reserve a dedicated IP address for the BMC in the DHCP server.

I then suggest configuring the BMC with a static IP address rather than one assigned by a DHCP server: if your LAN goes berserk and you have to connect to the BMC, it will be easier. There are two ways to do it: through the WebUI (see figure "Webui BMC network configuration") or through the UEFI BIOS.
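Besides the WebUI and the UEFI BIOS, the same settings can usually be applied from a running Linux host with ipmitool. This is not the method used in this howto, just a sketch: the LAN channel number (1) and the addresses are assumptions to adapt, and the function only prints the commands so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Print the ipmitool commands that would give the BMC a static address.
# Assumptions: LAN channel 1 and example addresses; nothing is executed here.
bmc_static_cmds() {
    chan=$1; ip=$2; mask=$3; gw=$4
    echo "ipmitool lan set $chan ipsrc static"
    echo "ipmitool lan set $chan ipaddr $ip"
    echo "ipmitool lan set $chan netmask $mask"
    echo "ipmitool lan set $chan defgw ipaddr $gw"
    echo "ipmitool lan print $chan"
}

bmc_static_cmds 1 192.168.1.50 255.255.255.0 192.168.1.1
```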

With the BMC configured, you have full control of your system through your favorite Web browser. HTML5 web Console Redirection is a fantastic feature not often available for free with other vendors (such as Dell, HPE or Lenovo for example).

PXE boot

It is of course possible to install your favorite OS from a USB stick. With an operational homelab already in place, PXE boot is even easier and quicker. With the Supermicro a2sdi-4c-hln4f there is just one caveat: only UEFI boot is available, not legacy boot (the manual and the BIOS options suggest legacy boot should be possible, but in practice it does not work at all).

Through the UEFI BIOS options, you should verify the boot order or press F12 during the boot of the system and choose UEFI Network.

Operating system (slackware64-15.0)

Preparations

Let's assume a Slackware Busybox prompt is available (resulting either from booting with a USB key or through network). The soft RAID 1 has to be configured at this stage, of course on the right disks ! (remember, the system is composed of 6 drives, 2 SSDs for OS and 4 SSDs for data).

The lsblk command is a good tool to list and identify the drives connected to a system. On the Supermicro a2sdi-4c-hln4f, the 4 drives connected to the mini-SAS port are enumerated first (sda, sdb, sdc, sdd), followed by the 2 drives connected to the traditional SATA ports (sde, sdf). The soft RAID 1 is going to be configured on those two drives (sde, sdf).
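Since picking the wrong disks would be destructive, it can help to match drives by model rather than by position. The helper below is a small sketch (its name is invented for this example) that filters "NAME MODEL" lines such as those printed by lsblk; the sda line in the demo is illustrative.

```shell
#!/bin/sh
# Print the device names whose line mentions the given model string.
# Reads "NAME MODEL..." lines on stdin, as produced by: lsblk -dn -o NAME,MODEL
names_for_model() {
    awk -v model="$1" 'index($0, model) { print $1 }'
}

# Typical use on the live system:
#   lsblk -dn -o NAME,MODEL | names_for_model WDS500G1R0A
# Self-contained demo with sample lines:
printf '%s\n' 'sda CT2000MX500SSD1' 'sde WDC WDS500G1R0A' 'sdf WDC WDS500G1R0A' |
    names_for_model WDS500G1R0A
```

With the sample lines, only sde and sdf are reported, which are the two drives meant for the soft RAID 1.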

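Reference documentation for this kind of setup:

- README_RAID.TXT (http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_RAID.TXT)

- README_LVM.TXT (http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_LVM.TXT)

- README_UEFI.TXT (http://slackware.mirrors.ovh.net/ftp.slackware.com/slackware64-15.0/README_UEFI.TXT)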

I recommend two identical partitions per disk (sde and sdf) such as :

# fdisk -l /dev/sde
Disk /dev/sde: 465.76 GiB, 500107862016 bytes, 976773168 sectors
Disk model: WDC WDS500G1R0A
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 96BE3679-F270-4EA4-88FF-2935AE4DCB46

Device      Start       End   Sectors   Size Type
/dev/sde1    2048    534527    532480   260M EFI System
/dev/sde2  534528 976773134 976238607 465.5G Linux RAID
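One way to obtain the layout shown above is sgdisk, which is also used in the next step for cloning. This is a sketch rather than the exact procedure followed here: it assumes the disk can be wiped, and the function only prints the commands (type codes ef00 and fd00 are sgdisk's codes for EFI System and Linux RAID).

```shell
#!/bin/sh
# Print the sgdisk commands that would create a 260 MiB EFI System partition
# followed by a Linux RAID partition using the rest of the disk.
# Assumption: the target disk may be wiped. Nothing is executed here.
esp_raid_layout_cmds() {
    disk=$1
    echo "sgdisk --zap-all $disk"
    echo "sgdisk -n 1:2048:+260M -t 1:ef00 $disk"
    echo "sgdisk -n 2:0:0 -t 2:fd00 $disk"
    echo "sgdisk -p $disk"
}

esp_raid_layout_cmds /dev/sde
```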

When you're done with one disk (e.g. sde), you can clone its partitioning to the other disk (e.g. sdf) with these commands:

sgdisk -R /dev/sdf /dev/sde # clone

sgdisk -G /dev/sdf # set a new GUID

Let's build the soft RAID 1 :

mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sde2 /dev/sdf2

Checking disk synchronization :

watch -n2 cat /proc/mdstat
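For scripting, the same information can be reduced to a single word. The function below is a minimal sketch (its name and the state labels are this example's own) that reads /proc/mdstat, or a copy of it, and reports whether an array is clean, still syncing or degraded.

```shell
#!/bin/sh
# Report the state of one md array: clean, syncing, degraded or unknown.
# Usage: md_state md0 [mdstat-file]   (defaults to /proc/mdstat)
md_state() {
    awk -v md="$1" '
        $1 == md && $2 == ":" { in_md = 1; next }   # header line of our array
        in_md && /^[^ ]/      { in_md = 0 }          # another section begins
        in_md && /resync|recovery/ { syncing = 1 }   # progress line is present
        in_md && match($0, /\[[U_]+\]/) {            # member map, e.g. [UU] or [U_]
            seen = 1
            if (index(substr($0, RSTART, RLENGTH), "_")) degraded = 1
        }
        END {
            if (!seen)         print "unknown"
            else if (degraded) print "degraded"
            else if (syncing)  print "syncing"
            else               print "clean"
        }' "${2:-/proc/mdstat}"
}

# Example: wait until the initial synchronization is over.
#   while [ "$(md_state md0)" = "syncing" ]; do sleep 10; done
```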

Saving the RAID configuration for system boot :

mdadm --examine --scan > /etc/mdadm.conf

Using LVM afterwards is a good option :

pvcreate /dev/md0

vgcreate <MY_VOLUME> /dev/md0

lvcreate -L+1g -nswap <MY_VOLUME>

lvcreate -L+4g -nroot <MY_VOLUME>

...

Then you can launch the famous Slackware setup command and proceed through the OS installation itself. Don't forget to create an initrd (thanks to mkinitrd_command_generator.sh which should choose the right options) and be sure to use elilo (or grub) as a bootloader.

Here is my elilo.conf as an example :

chooser=simple
delay=1
timeout=1
#
image=vmlinuz
  label=vmlinuz
  initrd=initrd.gz
  read-only
  append="root=/dev/system/root vga=normal ro consoleblank=0 transparent_hugepage=never hugepagesz=1G default_hugepagesz=1G hugepages=60 intel_iommu=on iommu=pt libata.allow_tpm=1 preempt=none"

A few explanations:

- transparent_hugepage=never hugepagesz=1G default_hugepagesz=1G hugepages=60 : disables transparent huge pages and reserves 60 huge pages of 1 GiB each for virtualization

- intel_iommu=on iommu=pt : recommended options for PCI device passthrough

- libata.allow_tpm=1 : mandatory option for SATA SED disks, as stated in the sedutil README (https://github.com/Drive-Trust-Alliance/sedutil/blob/master/README.md); more on that later

- preempt=none : disables kernel preemption, which is mostly useful on desktop systems
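After a reboot, it is worth checking that the huge pages were actually reserved. The sketch below (function name invented for this example) summarizes the two relevant fields of /proc/meminfo; with the options above, the expected result is 60 pages of 1048576 kB.

```shell
#!/bin/sh
# Print "<count> x <size> kB" for the reserved huge pages.
# Usage: hugepages_summary [meminfo-file]   (defaults to /proc/meminfo)
hugepages_summary() {
    awk '
        $1 == "HugePages_Total:" { total = $2 }   # number of reserved pages
        $1 == "Hugepagesize:"    { size = $2 }    # default huge page size in kB
        END { printf "%d x %d kB\n", total, size }
    ' "${1:-/proc/meminfo}"
}

# Example on the running hypervisor:
#   hugepages_summary
```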

Booting

This is the tricky part. Supermicro does not allow a command such as efibootmgr to insert or modify boot options in the UEFI subsystem, as mentioned in a Supermicro FAQ (https://www.supermicro.com/support/faqs/faq.cfm?faq=27004), at least when using an unsigned Linux kernel without secure boot. Secure boot is technically feasible with Slackware (see https://docs.slackware.com/howtos:security:enabling_secure_boot) but I never tested it.

I scratched my head for a while on this matter and found a solution online (https://gist.github.com/xiconfjs/f66feaaa012a2c80013dafc41e820c2d).

Virtualization layer

I'm currently using Qemu version 6.2.0 along with Libvirt version 8.1.0. Libvirt is optional (especially if you hate XML ^^) but could be useful with a tool such as virt-manager.

There are no official Slackware packages for Qemu or Libvirt but, thanks to slackbuilds.org, you do not have to start from scratch.

I recommend compiling Qemu with io_uring support for best I/O performance. The slackbuild will take care of that if the dependency is already installed.

Truenas core

I'm a big fan of this network storage appliance. It's robust (based on FreeBSD and ZFS), well maintained, and backed by a solid community. It's also heavy on resources (especially RAM: plan for at least 16 GB).

There is an endless debate on whether or not to use ECC RAM ... Facts are :

- it definitely works without ECC

- you can run into cases such as this incident (https://news.ycombinator.com/item?id=22158340) where ECC RAM prevents your filesystem from being corrupted

Conclusion : use ECC RAM and make backups ^^

Data encryption

Two alternatives identified :

- native ZFS encryption within Truenas

- SED encryption (Crucial MX500 disks are TCG Opal 2.0 compatible)

SED encryption comes with a caveat in the case of PCI passthrough. The disks are powered up when the hypervisor starts, not when the Truenas VM starts, and as a result SED operations (locking / unlocking) did not work as intended from within Truenas. I decided to use sedutil-cli on the hypervisor, before the Truenas VM starts up (disks are automatically locked when powered off). Unlocking the drives is a manual operation, but the password isn't stored inside Truenas.

Command to define a password on a new SED drive :

sedutil-cli --initialsetup <password> <drive>

Script example to unlock the drives :

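#!/bin/bash
# Unlock every TCG Opal 2 drive reported by sedutil-cli.
SEDUTIL="/usr/sbin/sedutil-cli"

if [ ! -x "$SEDUTIL" ]; then
    echo "no sedutil-cli found" >&2
    exit 1
fi

# Prompt without echoing the password to the terminal.
read -r -s -p "SED password: " password
echo

# Keep the devices whose second column (Opal version) is "2".
_drives=$("$SEDUTIL" --scan | awk '$2 == "2" {print $1}')
if [ -z "$_drives" ]; then
    echo "no SED drive found" >&2
    exit 1
fi

for _drive in $_drives; do
    # Unlock the global locking range for read/write, then mark the shadow MBR as done.
    "$SEDUTIL" --setLockingRange 0 RW "$password" "$_drive"
    "$SEDUTIL" --setMBRDone on "$password" "$_drive"
done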

But why use SED encryption in the first place? Well, as mentioned earlier, the C3558 is a modest CPU and, even though AES-NI instructions are available, I wanted to save CPU cycles. Moreover, I'm not paranoid about data encryption and I think SED is a sweet spot between performance and security. I ran a small benchmark with fio to illustrate this:

| | RAIDZ (no crypto), 2 VCPUs | RAIDZ (no crypto), 3 VCPUs | RAIDZ (zfs crypto), 2 VCPUs | RAIDZ (zfs crypto), 3 VCPUs | RAIDZ (SED crypto), 2 VCPUs | RAIDZ (SED crypto), 3 VCPUs |
|---|---|---|---|---|---|---|
| sequential write (MB/s) | 178 | 264 | 153 | 222 | | 245 |
| sequential read (MB/s) | | 442 | | 288 | | 436 |

More CPU power is needed for the SSDs to realize their full potential. But this particular setup consumes only 38 W at full load (it is powered on 24/7) and I just need to saturate a 1 Gb/s network.
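To put the figures in perspective, the relative cost of each encryption method can be computed from the 3 VCPUs columns of the table, and compared with what a 1 Gb/s link can carry. This is only arithmetic on the numbers above; the helper name is invented for this example.

```shell
#!/bin/sh
# Percentage lost relative to the unencrypted baseline, one decimal place.
pct_drop() {
    LC_ALL=C awk -v base="$1" -v val="$2" \
        'BEGIN { printf "%.1f\n", (base - val) * 100 / base }'
}

# Sequential figures with 3 VCPUs, taken from the table (MB/s).
echo "write, zfs crypto: $(pct_drop 264 222) %"
echo "write, SED crypto: $(pct_drop 264 245) %"
echo "read,  zfs crypto: $(pct_drop 442 288) %"
echo "read,  SED crypto: $(pct_drop 442 436) %"

# A 1 Gb/s link carries at most 1000 / 8 = 125 MB/s, before protocol overhead.
echo "1 Gb/s link ceiling: $(( 1000 / 8 )) MB/s"
```

Every measured value in the table is above that 125 MB/s ceiling, which is why the network, not the storage, is the limiting factor for this use.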

Libvirt XML configuration

Special points of attention.

- Using huge pages for memory :

<memoryBacking>
  <hugepages/>
</memoryBacking>

- Using io_uring for Truenas boot disk :

<disk type='block' device='disk'>
  <driver name='qemu' type='raw' cache='none' io='io_uring'/>
  <source dev='/dev/<VOLUME>/<DISK>'/>
  <target dev='vda' bus='virtio'/>
</disk>

- SATA controller PCI passthrough (mini-SAS) :

<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x00' slot='0x13' function='0x0'/>
  </source>
</hostdev>
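The address element is simply the PCI address of the controller split into its four fields. On the hypervisor, a command such as "lspci -D -nn | grep -i sata" should list the candidates; the small helper below (a sketch, with a name invented for this example) converts an address in that form into the XML element.

```shell
#!/bin/sh
# Convert a PCI address as printed by "lspci -D" (e.g. 0000:00:13.0)
# into the <address> element used inside a libvirt <hostdev><source>.
pci_to_libvirt_address() {
    old_ifs=$IFS
    IFS=':.'            # split on the domain/bus/slot/function separators
    set -- $1
    IFS=$old_ifs
    [ $# -eq 4 ] || return 1
    printf "<address domain='0x%s' bus='0x%s' slot='0x%s' function='0x%s'/>\n" \
        "$1" "$2" "$3" "$4"
}

pci_to_libvirt_address 0000:00:13.0
```

Before passing a device through, it is also worth checking that nothing the hypervisor needs lives on the same controller; here the two OS SSDs sit on the other SATA ports.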

OPNSense

OPNSense (fork of PFSense) is another top notch appliance based on FreeBSD but network and firewall oriented this time. Thanks to its four 1 Gb/s Ethernet interfaces, the Supermicro A2SDi-4C-HLN4F motherboard is a perfect candidate to host a router / firewall.

Target setup (figure "Network diagram"):

Ethernet PCI passthrough

While it's not mandatory, passing the Ethernet devices through to the OPNSense VM (PCI passthrough) is recommended, especially if VLANs are used in conjunction with NAT to give access to the Internet. In this case, the MTU stays at 1500 across all networks and there is no need to tweak the client OSes to lower it. With virtual interfaces, on the other hand, the MTU has to be lowered when VLANs are used.

If virtual interfaces are used (to bridge OPNSense with some VMs on the hypervisor, such as the Truenas one), the global setting that disables all hardware offloading can be applied. It can then be overridden for specific hardware interfaces, where offloads such as checksum, LRO, TSO or VLAN filtering are welcome and will work.