Lab architecture

Latest revision as of 10 March 2023, 13:52

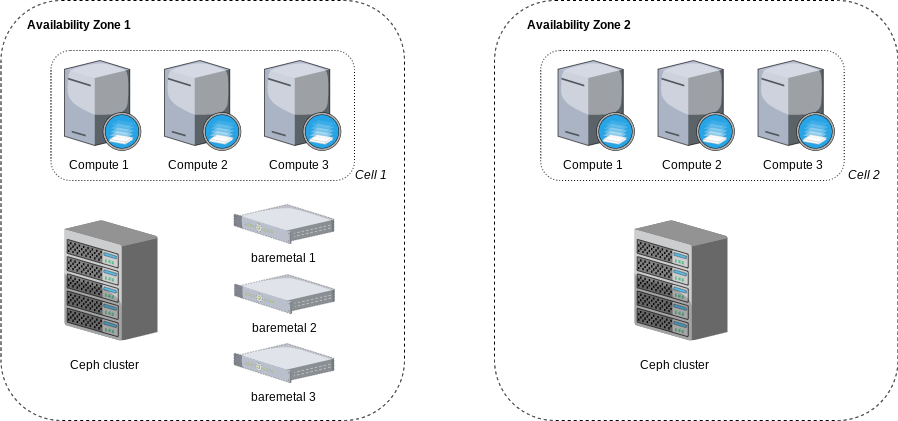

One region, two availability zones

This OpenStack lab consists of one region (fr1) and two availability zones (az1 and az2).

In the first availability zone:

- 3 compute nodes (aka hypervisors) for Nova within a Nova cell called cell1

- 1 Ceph cluster for Cinder and Glance

- 3 bare metal nodes for Ironic

In the second availability zone:

- 3 compute nodes within a Nova cell called cell2

- 1 Ceph cluster (Cinder only)
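The layout above can be written down as a small data model. This is only an illustrative sketch: the class and field names (`AvailabilityZone`, `Region`, `ceph_backends`, and so on) are hypothetical and do not come from any OpenStack API; only the region, zone and cell names and the node counts are taken from the description.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AvailabilityZone:
    name: str
    nova_cell: str
    compute_nodes: int
    ceph_backends: tuple[str, ...]  # services served by this zone's Ceph cluster
    bare_metal_nodes: int = 0


@dataclass(frozen=True)
class Region:
    name: str
    zones: tuple[AvailabilityZone, ...]

    def total_compute_nodes(self) -> int:
        """Sum the Nova compute nodes over all availability zones."""
        return sum(zone.compute_nodes for zone in self.zones)


# Values below mirror the lab description: one region, two zones, one cell per zone.
FR1 = Region(
    name="fr1",
    zones=(
        AvailabilityZone("az1", "cell1", 3, ("cinder", "glance"), bare_metal_nodes=3),
        AvailabilityZone("az2", "cell2", 3, ("cinder",)),
    ),
)

if __name__ == "__main__":
    for zone in FR1.zones:
        print(zone.name, zone.nova_cell, zone.compute_nodes, zone.ceph_backends)
    print("compute nodes in region:", FR1.total_compute_nodes())
```

Keeping the cell name on the zone reflects the one-cell-per-zone layout of this lab; a deployment with several cells per zone would need a different shape.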

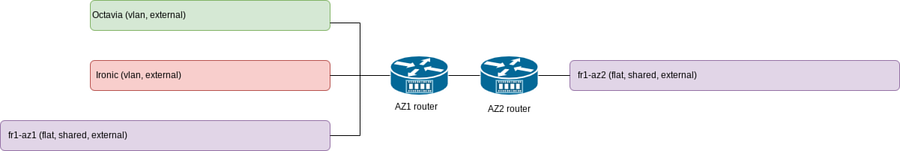

Networks

The networks are:

- fr1-az1: provider network in AZ1 (flat, i.e. without VLAN; shared, i.e. available to everybody; external, i.e. managed outside OpenStack)

- fr1-az2: provider network in AZ2

- ironic: external VLAN for bare metal nodes (only available in AZ1)

- octavia: external VLAN for Octavia management (only available in AZ1)
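As a rough sketch, the four networks could be described as data and turned into `openstack network create` command lines. The flags used (`--provider-network-type`, `--provider-physical-network`, `--provider-segment`, `--share`, `--external`) are standard options of that command, but everything else is an assumption made for illustration: the physical network labels, the VLAN IDs, and the idea that fr1-az2 has the same attributes as fr1-az1 are not stated in this page.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderNetwork:
    name: str
    network_type: str            # "flat" or "vlan"
    physical_network: str        # hypothetical physnet label
    segment: int | None = None   # VLAN ID (hypothetical values below)
    shared: bool = False
    external: bool = True

    def create_command(self) -> list[str]:
        """Build the argv of an `openstack network create` call (not executed)."""
        cmd = [
            "openstack", "network", "create",
            "--provider-network-type", self.network_type,
            "--provider-physical-network", self.physical_network,
        ]
        if self.segment is not None:
            cmd += ["--provider-segment", str(self.segment)]
        if self.shared:
            cmd.append("--share")
        if self.external:
            cmd.append("--external")
        cmd.append(self.name)
        return cmd


NETWORKS = [
    ProviderNetwork("fr1-az1", "flat", "physnet-az1", shared=True),
    # Assumption: fr1-az2 mirrors fr1-az1 (flat, shared, external).
    ProviderNetwork("fr1-az2", "flat", "physnet-az2", shared=True),
    # Assumption: VLAN IDs 100 and 200 are placeholders.
    ProviderNetwork("ironic", "vlan", "physnet-az1", segment=100),
    ProviderNetwork("octavia", "vlan", "physnet-az1", segment=200),
]

if __name__ == "__main__":
    for network in NETWORKS:
        print(" ".join(network.create_command()))
```

The commands are only printed, so the sketch can be reviewed and adjusted before anything touches a real cloud.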

Resources

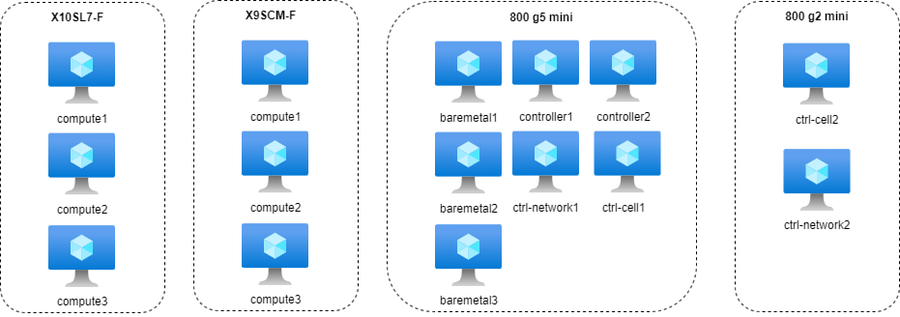

- Availability Zone 1:

  - HP Elitedesk 800 g5 mini (Intel Core i5-9500, 64 GB RAM DDR4, 500 GB NVMe)

    - 3 VMs acting as bare metal nodes (1 vCPU, 4 GB RAM, 20 GB disk for each VM)

    - 2 VMs for OpenStack controllers (2 vCPUs, 16 GB RAM, 40 GB disk for each VM)

    - 1 VM for OpenStack cell controller (1 vCPU, 2 GB RAM, 20 GB disk)

    - 1 VM for OpenStack network controller (1 vCPU, 2 GB RAM, 20 GB disk)

  - Supermicro X9SCM-F (Intel Xeon E3-1265L v2, 32 GB RAM ECC DDR3)

    - 3 VMs acting as hyperconverged nodes (compute + Ceph RBD storage). Each VM has 10 GB of RAM, 2 vCPUs, one virtual disk for the OS and one virtual disk for the Ceph OSD


- Availability Zone 2:

  - HP Elitedesk 800 g2 mini (Intel Core i5-6500T, 32 GB RAM DDR4, 500 GB NVMe)

    - 1 VM for OpenStack cell controller (1 vCPU, 2 GB RAM, 20 GB disk)

    - 1 VM for OpenStack network controller (1 vCPU, 2 GB RAM, 20 GB disk)

  - Supermicro X10SL7-F (Intel Xeon E3-1265L v3, 32 GB RAM ECC DDR3)

    - 3 VMs acting as hyperconverged nodes (compute + Ceph RBD storage). Each VM has 10 GB of RAM, 2 vCPUs, one virtual disk for the OS and one virtual disk for the Ceph OSD

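A quick way to sanity-check this sizing is to add up the RAM assigned to the VMs on each physical host and compare it with the host's memory. The sketch below does that arithmetic; the mapping of VMs to hosts is an assumption read from the order of the list (each group of VMs is taken to run on the machine listed just above it), and the dictionary layout is purely illustrative.

```python
# Host name -> (host RAM in GB, [(VM role, VM count, RAM in GB per VM), ...]).
# Assumption: each VM group runs on the machine listed directly above it.
HOSTS = {
    "az1 / HP Elitedesk 800 g5 mini": (64, [
        ("bare metal node", 3, 4),
        ("controller", 2, 16),
        ("cell controller", 1, 2),
        ("network controller", 1, 2),
    ]),
    "az1 / Supermicro X9SCM-F": (32, [("hyperconverged node", 3, 10)]),
    "az2 / HP Elitedesk 800 g2 mini": (32, [
        ("cell controller", 1, 2),
        ("network controller", 1, 2),
    ]),
    "az2 / Supermicro X10SL7-F": (32, [("hyperconverged node", 3, 10)]),
}


def allocated_ram_gb(vms):
    """Total RAM in GB assigned to a list of (role, count, ram_per_vm) groups."""
    return sum(count * ram for _role, count, ram in vms)


if __name__ == "__main__":
    for host, (host_ram, vms) in HOSTS.items():
        used = allocated_ram_gb(vms)
        print(f"{host}: {used} GB of {host_ram} GB assigned to VMs")
```

Under that assumption, the VMs account for 48 of 64 GB on the g5 mini, 30 of 32 GB on each Supermicro board, and 4 of 32 GB on the g2 mini, before any hypervisor overhead.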

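The bare metal nodes are simulated with [Virtual BMC](https://docs.openstack.org/virtualbmc/latest/index.html) and [Sushy-tools](https://docs.openstack.org/sushy-tools/latest/index.html), which put an IPMI and a Redfish endpoint, respectively, in front of the VMs. Ironic uses IPMI or Redfish, among other protocols, to power nodes on and off and to change their boot order.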
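To give an idea of what those power and boot-order operations look like on the wire, here is a minimal sketch of the corresponding Redfish requests. The resource paths and property names (`ComputerSystem.Reset`, `ResetType`, `BootSourceOverrideTarget`, `BootSourceOverrideEnabled`) come from the Redfish standard; the base URL and system identifier are placeholders, and the helper functions are hypothetical. This is not how Ironic is implemented internally, and nothing is actually sent.

```python
import json


def reset_request(base_url, system_id, reset_type):
    """Describe a Redfish power action, e.g. reset_type "On" or "ForceOff"."""
    url = f"{base_url}/redfish/v1/Systems/{system_id}/Actions/ComputerSystem.Reset"
    return "POST", url, {"ResetType": reset_type}


def boot_override_request(base_url, system_id, target, enabled="Once"):
    """Describe a Redfish boot device override, e.g. target "Pxe" or "Hdd"."""
    url = f"{base_url}/redfish/v1/Systems/{system_id}"
    body = {
        "Boot": {
            "BootSourceOverrideTarget": target,
            "BootSourceOverrideEnabled": enabled,
        }
    }
    return "PATCH", url, body


if __name__ == "__main__":
    # Placeholders: adjust to wherever the Redfish emulator listens
    # and to the identifier of the simulated node.
    base_url = "http://bmc.example:8000"
    system_id = "bm-node-1"

    requests_to_send = [
        boot_override_request(base_url, system_id, "Pxe"),
        reset_request(base_url, system_id, "On"),
        reset_request(base_url, system_id, "ForceOff"),
    ]
    for method, url, body in requests_to_send:
        print(method, url, json.dumps(body))
```

Setting the boot override to PXE once and then powering the node on is the usual sequence for network-booting a node during provisioning.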