Blazar


Latest version as of 18 September 2024 at 16:47

Let's experiment with Blazar, the OpenStack service dedicated to resource reservation.

Concept

Imagine this situation: you provide two services, one with high priority and one with low priority. During the week, each service runs VMs on multiple nodes with auto-scaling (i.e. additional VMs are automatically provisioned when the workload increases). You know that over the weekend your high-priority service will face more demand, and you don't want your low-priority service to starve it of resources.

Blazar makes it possible to reserve dedicated compute nodes for your high-priority service for a given time period, say, the weekend.

Best of all, unreserved compute nodes managed by Blazar can run preemptible VMs, so their computing power does not sit idle.

Installation / Configuration

Kolla-ansible is used to deploy our private cloud.

Enable Blazar in /etc/kolla/globals.yml

```yaml
enable_blazar: "yes"
kolla_enable_tls_internal: "yes"
kolla_enable_tls_backend: "yes"
```

If TLS is enabled, add a *cafile* option to /etc/kolla/config/blazar.conf on the Ansible deploy host:

```ini
[DEFAULT]
cafile = /etc/pki/ca-trust/source/anchors/kolla-customca-root.crt
```
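The "if TLS is enabled" condition can also be scripted on the deploy host. The following is a minimal sketch and is not part of Kolla-Ansible. The function name `write_blazar_cafile_override` is hypothetical. It assumes globals.yml uses the `key: "yes"` style shown above, and it overwrites any existing blazar.conf override.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Kolla-Ansible): write the Blazar cafile
# override only when internal TLS is enabled in globals.yml.
# Usage: write_blazar_cafile_override [globals_file] [config_dir]
# Assumption: an existing <config_dir>/blazar.conf is overwritten.
write_blazar_cafile_override() {
  local globals=${1:-/etc/kolla/globals.yml}
  local config_dir=${2:-/etc/kolla/config}
  local cafile=/etc/pki/ca-trust/source/anchors/kolla-customca-root.crt

  if [ ! -r "$globals" ]; then
    echo "cannot read $globals" >&2
    return 1
  fi

  # Look for an uncommented kolla_enable_tls_internal: "yes" (quotes optional).
  if ! grep -Eq '^kolla_enable_tls_internal:[[:space:]]*"?yes"?[[:space:]]*$' "$globals"; then
    echo "internal TLS is not enabled in $globals, nothing to do" >&2
    return 0
  fi

  mkdir -p "$config_dir"
  printf '[DEFAULT]\ncafile = %s\n' "$cafile" > "$config_dir/blazar.conf"
}
```

Called without arguments, it reads /etc/kolla/globals.yml and writes to /etc/kolla/config, which are the paths used on this page.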

Activate preemptible instances and BlazarFilter in nova-scheduler

On the Ansible deploy host, put the following in /etc/kolla/config/nova/nova-scheduler.conf (replace <list_of_other_filters> with the filters already enabled in your deployment):

```ini
[filter_scheduler]
available_filters = nova.scheduler.filters.all_filters
available_filters = blazarnova.scheduler.filters.blazar_filter.BlazarFilter
enabled_filters = <list_of_other_filters>,BlazarFilter

[blazar:physical:host]
allow_preemptibles = true
```
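The value of `<list_of_other_filters>` differs from one deployment to the next, so a script that generates this override should take it as input rather than guess it. This is a minimal sketch with a hypothetical function name. It overwrites any existing nova-scheduler.conf override, so merge by hand if you already have one.

```bash
#!/usr/bin/env bash
# Hypothetical helper: generate the nova-scheduler override shown above.
# Usage: write_nova_scheduler_override <other_filters> [config_dir]
#   <other_filters> is your current comma-separated enabled_filters list
#   (the <list_of_other_filters> placeholder); it is not guessed here.
# Assumption: an existing <config_dir>/nova/nova-scheduler.conf is overwritten.
write_nova_scheduler_override() {
  local other_filters=$1
  local config_dir=${2:-/etc/kolla/config}
  if [ -z "$other_filters" ]; then
    echo "usage: write_nova_scheduler_override <other_filters> [config_dir]" >&2
    return 1
  fi
  mkdir -p "$config_dir/nova"
  # nova reads available_filters as a multi-valued option, so both lines count.
  cat > "$config_dir/nova/nova-scheduler.conf" <<EOF
[filter_scheduler]
available_filters = nova.scheduler.filters.all_filters
available_filters = blazarnova.scheduler.filters.blazar_filter.BlazarFilter
enabled_filters = ${other_filters},BlazarFilter

[blazar:physical:host]
allow_preemptibles = true
EOF
}
```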

Deploy

```bash
kolla-ansible -i <inventory_file> deploy
```

Create a dedicated flavor for preemptible instances

For the sake of simplicity, only two flavors are used:

- a "normal" flavor (no preemption)

- a preemptible flavor

```bash
# normal flavor
openstack flavor create --id 1 --ram 512 --disk 1 --vcpus 1 g1

# preemptible flavor
openstack flavor create --id 2 --ram 512 --disk 1 --vcpus 1 --property blazar:preemptible=true g1.preempt
```

Action

Register hosts with Blazar

The hosts you want Blazar to manage must not belong to any host aggregate.

```bash
openstack reservation host create compute1.cloud.ld
openstack reservation host create compute4.cloud.ld
openstack reservation host list
```

```text
+----+---------------------+-------+-----------+----------+
| id | hypervisor_hostname | vcpus | memory_mb | local_gb |
+----+---------------------+-------+-----------+----------+
| 8  | compute1.cloud.ld   | 2     | 9664      | 52       |
| 4  | compute4.cloud.ld   | 2     | 5632      | 22       |
+----+---------------------+-------+-----------+----------+
```
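Because the hosts must not belong to any aggregate, a registration script could check this before calling `openstack reservation host create`. This is a minimal sketch with hypothetical function names. It assumes admin credentials, and it assumes that `openstack aggregate show <id> -f value -c hosts` prints the aggregate's host names. The exact formatting of that output varies between client versions, so the match is a loose whole-word one.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: refuse to register a host that already sits in an aggregate.
# Assumption: admin credentials are loaded in the environment.

# Returns 0 if <host> appears in the host list of any aggregate.
host_in_any_aggregate() {
  local host=$1 agg
  for agg in $(openstack aggregate list -f value -c ID); do
    # -F: dots in host names are literal; -w: avoid matching compute1 in compute10.
    if openstack aggregate show "$agg" -f value -c hosts | grep -qwF "$host"; then
      return 0
    fi
  done
  return 1
}

# Registers <host> with Blazar only if it is not in an aggregate.
register_blazar_host() {
  local host=$1
  if host_in_any_aggregate "$host"; then
    echo "$host belongs to an aggregate; remove it before registering it with Blazar" >&2
    return 1
  fi
  openstack reservation host create "$host"
}
```

Usage, with the hosts from this page: `register_blazar_host compute1.cloud.ld`.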

Create some instances

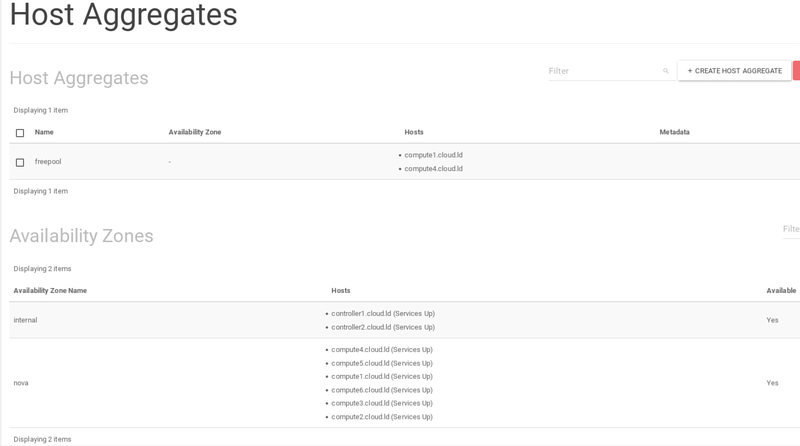

Create 8 instances: one "normal" and seven "preemptible":

```bash
openstack server create --image cirros --flavor g1 --network Ext-Net vm1

for i in $(seq 2 8); do
  openstack server create --image cirros --flavor g1.preempt --network Ext-Net vm${i}
done
```

Notes:

- The preemptible instances are spread across compute1 and compute4.

- The normal instance runs on a compute node that is not managed by Blazar.
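The distribution described in these notes can also be checked from the CLI instead of Horizon. This is a sketch with a hypothetical function name. It assumes admin credentials, since the Host column is only populated for admins. It also assumes your python-openstackclient version includes a Host column in `server list --long`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<host> <number of instances>" for each compute node.
# Assumptions: admin credentials; "server list --long" exposes a Host column.
instances_per_host() {
  openstack server list --long -f value -c Host |
    awk 'NF { count[$1]++ } END { for (h in count) print h, count[h] }' |
    sort
}
```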

Make a reservation

Reserve one compute node with at least 2 vCPUs, starting 5 minutes from now and ending 20 minutes from now (i.e. a 15-minute lease). The lease is called lease-1:
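Note that `--end-date` is an absolute date, not a duration. A small helper can make the delay and the duration explicit. This is a sketch with a hypothetical function name. It assumes GNU date, which the command below already relies on through `--date`. It formats dates in UTC (`-u`), on the assumption that Blazar interprets lease dates as UTC; the command below uses local time, which gives the same dates only on a host running in UTC.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute a lease window starting <delay> minutes after
# <base> and lasting <duration> minutes, formatted for --start-date/--end-date.
# Assumptions: GNU date (for -d @EPOCH) and lease dates expressed in UTC.
# Usage: lease_window <base_epoch_seconds> <delay_minutes> <duration_minutes>
lease_window() {
  local base=$1 delay=$2 duration=$3
  local start end
  start=$(( base + delay * 60 ))
  end=$(( start + duration * 60 ))
  date -u -d "@${start}" +"%Y-%m-%d %H:%M"
  date -u -d "@${end}" +"%Y-%m-%d %H:%M"
}

# Example wiring (not executed here):
#   mapfile -t win < <(lease_window "$(date +%s)" 5 15)
#   openstack reservation lease create ... \
#     --start-date "${win[0]}" --end-date "${win[1]}" lease-1
```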

```bash
openstack reservation lease create \
  --reservation resource_type=physical:host,min=1,max=1,hypervisor_properties='[">=", "$vcpus", "2"]' \
  --start-date "$(date --date '+5 min' +"%Y-%m-%d %H:%M")" \
  --end-date "$(date --date '+20 min' +"%Y-%m-%d %H:%M")" lease-1
```

```text
+--------------+-------------------------------------------------------------+
| Field | Value |
+--------------+-------------------------------------------------------------+
| created_at | 2024-01-21 22:00:57 |
| degraded | False |
| end_date | 2024-01-21T22:20:00.000000 |
| events | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": null, |
| | "id": "1b4700d4-960d-46c0-8022-af743bd778ce", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "end_lease", |
| | "time": "2024-01-21T22:20:00.000000", |
| | "status": "UNDONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": null, |
| | "id": "3f8a4928-a360-495d-826d-4b92a5b7a003", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "start_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "UNDONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": null, |
| | "id": "564bd93c-e157-4dcb-825a-2258d882a4d2", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "before_end_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "UNDONE" |
| | } |
| id | 7180e34e-2718-47c9-8616-590e6a3585b2 |
| name | lease-1 |
| project_id | e1bbe999a4a84d91bbf79eaf54adc60d |
| reservations | { |
| | "created_at": "2024-01-21 22:00:57", |
| | "updated_at": "2024-01-21 22:00:58", |
| | "id": "e379e7c5-b708-4822-80a3-d9e9a0c573a7", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "resource_id": "80c24e3a-beb5-4f72-a566-31a1be252ba5", |
| | "resource_type": "physical:host", |
| | "status": "pending", |
| | "missing_resources": false, |
| | "resources_changed": false, |
| | "hypervisor_properties": "[\">=\", \"$vcpus\", \"2\"]", |
| | "resource_properties": "", |
| | "before_end": "default", |
| | "min": 1, |
| | "max": 1 |
| | } |
| start_date | 2024-01-21T22:05:00.000000 |
| status | PENDING |
| trust_id | 9e5864d9805b4ff1bb452730fef859ab |
| updated_at | 2024-01-21 22:00:58 |
| user_id | e2cde8831daf4285b148499159c4c14e |
+--------------+-------------------------------------------------------------+
```

Note the reservation ID (the id inside the reservations field, not the lease ID) for later use: e379e7c5-b708-4822-80a3-d9e9a0c573a7

The lease is PENDING, i.e. not yet ACTIVE.
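Copying the reservation ID by hand is error-prone in a script. This minimal sketch extracts it from the table printed by `openstack reservation lease show`. It assumes the layout shown above (events first, then reservations) and a lease with a single reservation. The function name is hypothetical, and other output formats such as `-f json` are not handled.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "lease show" table output on stdin and print the
# first "id" found after the "reservations" row (i.e. the reservation ID,
# skipping the event IDs and the lease ID printed before it).
# Assumption: the table layout matches the output shown on this page.
reservation_id_from_lease_show() {
  awk -F'"' '
    /^[|] reservations / { in_reservations = 1 }
    in_reservations && $2 == "id" { print $4; exit }
  '
}

# Example wiring (not executed here):
#   RESERVATION_ID=$(openstack reservation lease show lease-1 | reservation_id_from_lease_show)
```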

Once the start date (22:05 here) is reached, the lease becomes ACTIVE:

```bash
openstack reservation lease show lease-1
```

```text
+--------------+-------------------------------------------------------------+
| Field | Value |
+--------------+-------------------------------------------------------------+
| created_at | 2024-01-21 22:00:57 |
| degraded | False |
| end_date | 2024-01-21T22:20:00.000000 |
| events | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": null, |
| | "id": "1b4700d4-960d-46c0-8022-af743bd778ce", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "end_lease", |
| | "time": "2024-01-21T22:20:00.000000", |
| | "status": "UNDONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": "2024-01-21 22:05:11", |
| | "id": "3f8a4928-a360-495d-826d-4b92a5b7a003", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "start_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "DONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": "2024-01-21 22:05:12", |
| | "id": "564bd93c-e157-4dcb-825a-2258d882a4d2", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "before_end_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "DONE" |
| | } |
| id | 7180e34e-2718-47c9-8616-590e6a3585b2 |
| name | lease-1 |
| project_id | e1bbe999a4a84d91bbf79eaf54adc60d |
| reservations | { |
| | "created_at": "2024-01-21 22:00:57", |
| | "updated_at": "2024-01-21 22:05:11", |
| | "id": "e379e7c5-b708-4822-80a3-d9e9a0c573a7", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "resource_id": "80c24e3a-beb5-4f72-a566-31a1be252ba5", |
| | "resource_type": "physical:host", |
| | "status": "active", |
| | "missing_resources": false, |
| | "resources_changed": false, |
| | "hypervisor_properties": "[\">=\", \"$vcpus\", \"2\"]", |
| | "resource_properties": "", |
| | "before_end": "default", |
| | "min": 1, |
| | "max": 1 |
| | } |
| start_date | 2024-01-21T22:05:00.000000 |
| status | ACTIVE |
| trust_id | 9e5864d9805b4ff1bb452730fef859ab |
| updated_at | 2024-01-21 22:05:11 |
| user_id | e2cde8831daf4285b148499159c4c14e |
+--------------+-------------------------------------------------------------+
```
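Instead of re-running `lease show` until the status changes, a script can poll for the transition. This is a sketch with a hypothetical function name. It assumes that the standard openstackclient output options `-f value -c status` print only the lease status (PENDING, ACTIVE, TERMINATED, ...).

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a lease until it reaches the wanted status.
# Usage: wait_for_lease_status <lease> <status> [timeout_seconds] [interval_seconds]
# Returns 0 once the status matches, 1 on CLI error or timeout.
wait_for_lease_status() {
  local lease=$1 wanted=$2 timeout=${3:-900} interval=${4:-10}
  local waited=0 status
  while :; do
    status=$(openstack reservation lease show "$lease" -f value -c status) || return 1
    if [ "$status" = "$wanted" ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "lease $lease is still $status after ${waited}s" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}
```

For this lease, `wait_for_lease_status lease-1 ACTIVE` waits for the start. The same call with TERMINATED and a longer timeout waits for the end.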

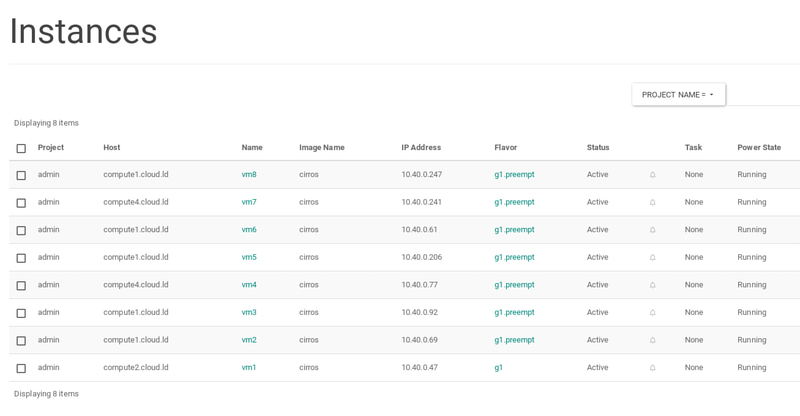

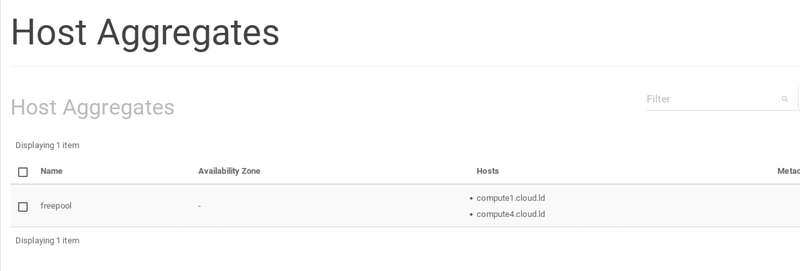

Let's look at the impact on host aggregates in Horizon: compute4.cloud.ld is now reserved and belongs to a dedicated aggregate created for the reservation:

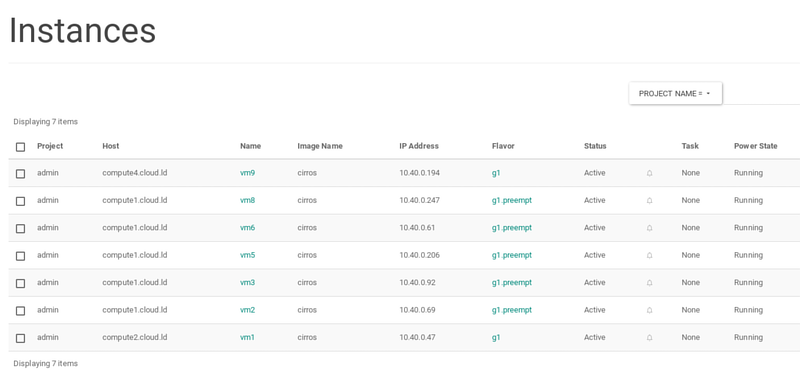

The preemptible instances that were running on compute4.cloud.ld are gone:

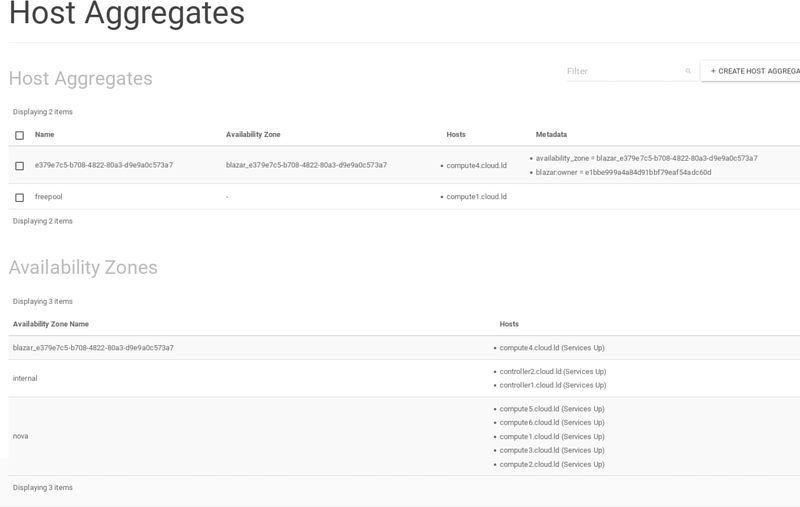

Let's create an instance on the reserved host by passing our reservation ID as a scheduler hint:

```bash
openstack server create --image cirros --flavor g1 --network Ext-Net --hint reservation=e379e7c5-b708-4822-80a3-d9e9a0c573a7 vm9
```

Then check the result in Horizon (compute4.cloud.ld now hosts vm9):

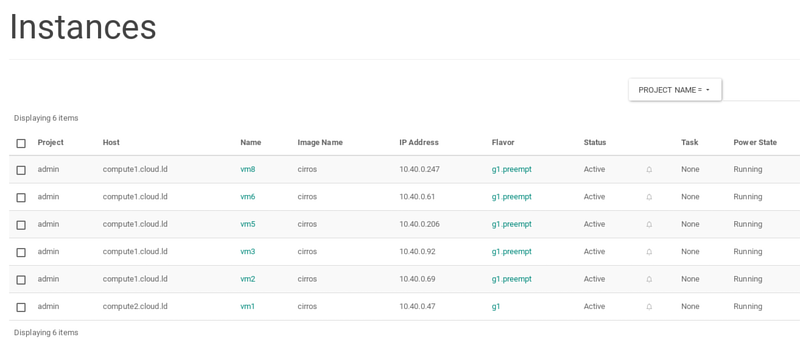
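The same check can be made from the CLI. This is a sketch with hypothetical function names. It assumes admin credentials, because the `OS-EXT-SRV-ATTR:host` field of `openstack server show` is hidden from regular users. Depending on how nova-compute is configured, that field may hold the short service host name rather than the hypervisor hostname listed by `openstack reservation host list`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check which compute node a server landed on.
# Assumption: admin credentials (the host attribute is admin-only).
server_host() {
  openstack server show "$1" -f value -c OS-EXT-SRV-ATTR:host
}

# Returns 0 if <server> runs on <expected_host>, 1 otherwise.
expect_server_on_host() {
  local server=$1 expected=$2 actual
  actual=$(server_host "$server") || return 1
  if [ "$actual" != "$expected" ]; then
    echo "$server is on '$actual', expected '$expected'" >&2
    return 1
  fi
}
```

Usage for this example: `expect_server_on_host vm9 compute4.cloud.ld`.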

When the lease ends, lease-1 becomes TERMINATED:

```bash
openstack reservation lease show lease-1
```

```text
+--------------+-------------------------------------------------------------+
| Field | Value |
+--------------+-------------------------------------------------------------+
| created_at | 2024-01-21 22:00:57 |
| degraded | False |
| end_date | 2024-01-21T22:20:00.000000 |
| events | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": "2024-01-21 22:20:11", |
| | "id": "1b4700d4-960d-46c0-8022-af743bd778ce", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "end_lease", |
| | "time": "2024-01-21T22:20:00.000000", |
| | "status": "DONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": "2024-01-21 22:05:11", |
| | "id": "3f8a4928-a360-495d-826d-4b92a5b7a003", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "start_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "DONE" |
| | } |
| | { |
| | "created_at": "2024-01-21 22:00:58", |
| | "updated_at": "2024-01-21 22:05:12", |
| | "id": "564bd93c-e157-4dcb-825a-2258d882a4d2", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "event_type": "before_end_lease", |
| | "time": "2024-01-21T22:05:00.000000", |
| | "status": "DONE" |
| | } |
| id | 7180e34e-2718-47c9-8616-590e6a3585b2 |
| name | lease-1 |
| project_id | e1bbe999a4a84d91bbf79eaf54adc60d |
| reservations | { |
| | "created_at": "2024-01-21 22:00:57", |
| | "updated_at": "2024-01-21 22:20:11", |
| | "id": "e379e7c5-b708-4822-80a3-d9e9a0c573a7", |
| | "lease_id": "7180e34e-2718-47c9-8616-590e6a3585b2", |
| | "resource_id": "80c24e3a-beb5-4f72-a566-31a1be252ba5", |
| | "resource_type": "physical:host", |
| | "status": "deleted", |
| | "missing_resources": false, |
| | "resources_changed": false, |
| | "hypervisor_properties": "[\">=\", \"$vcpus\", \"2\"]", |
| | "resource_properties": "", |
| | "before_end": "default", |
| | "min": 1, |
| | "max": 1 |
| | } |
| start_date | 2024-01-21T22:05:00.000000 |
| status | TERMINATED |
| trust_id | 9e5864d9805b4ff1bb452730fef859ab |
| updated_at | 2024-01-21 22:20:11 |
| user_id | e2cde8831daf4285b148499159c4c14e |
+--------------+-------------------------------------------------------------+
```

Finally, compute4.cloud.ld returns to the freepool aggregate: